AI, machine learning, and Large Language Models (LLMs) have been, and still are, a big deal for Intel, and at this year’s Innovation 2023 the chipmaker wasn’t shy about reaffirming that point. As a kind of show of force, it demonstrated the accuracy of its inferencing engine with a query that searched for a particular point in its own presentation.

To keep this very simple, Greg Lavender, Chief Technology Officer at Intel, presented the inference language model the company developed to scrub through data that had been collected and made available both online and within Intel’s repository at the time. Running on the chipmaker’s Gaudi2 AI-based GPU, it was used to find an exact moment within the presentation. For the record, Gaudi2 is basically a direct competitor to NVIDIA’s previous-generation A100 HPC GPU, and both are designed for machine learning workloads.

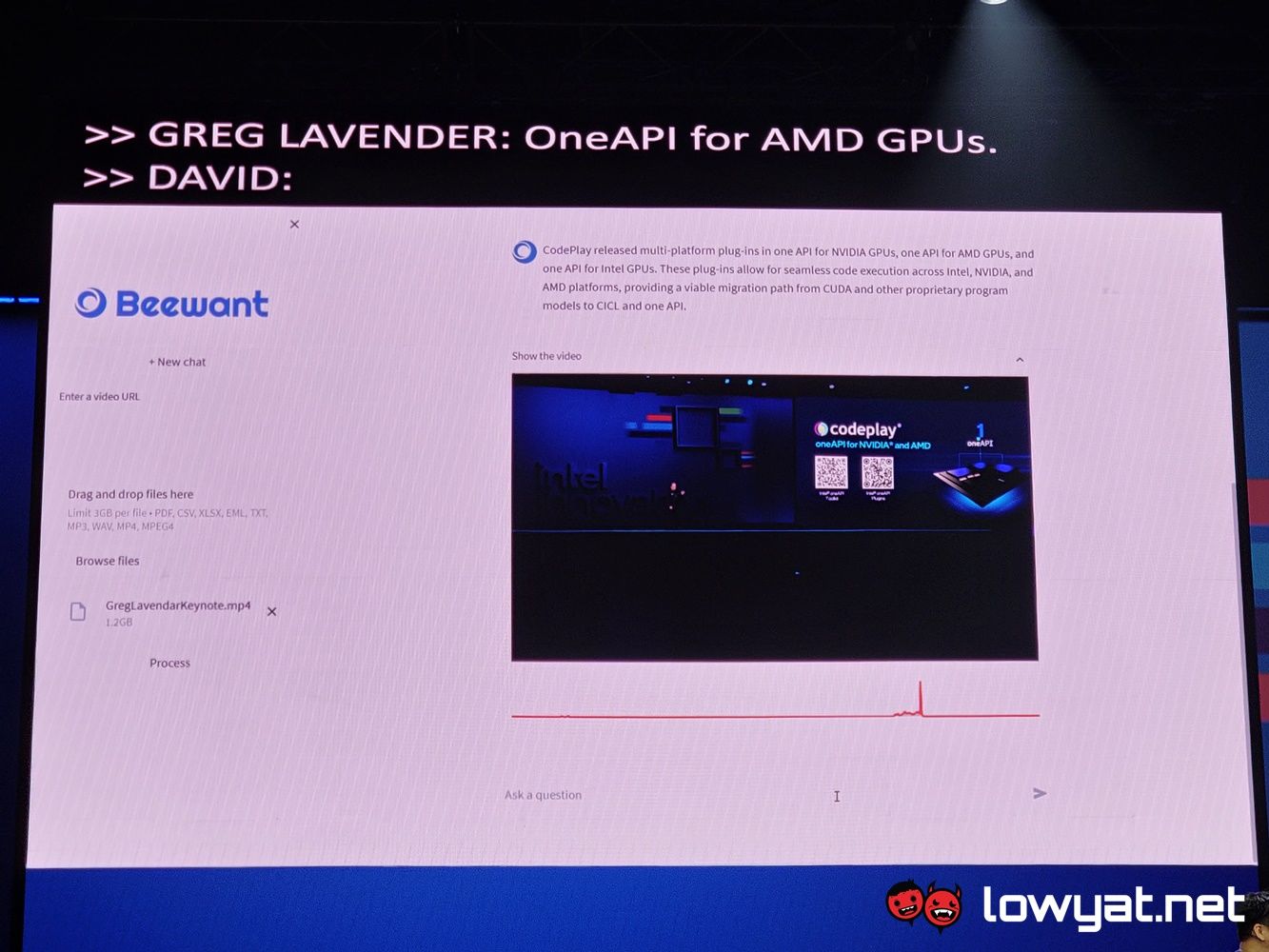

The platform that Intel used to perform the search was a program called Beewant. Think ChatGPT, but with an added focus on scrubbing through videos and images rather than just text, all part of this generation of AI-driven content.

The end result with Gaudi2 is a search that allowed Lavender to find a specific portion of his Innovation keynote, right down to the exact second, while that keynote was still ongoing. While this may all seem simple, it is no less interesting, and it is an impressive way of demonstrating the prowess of Intel’s own HPC GPU.

The post Intel’s Latest Inference Language Model And Gaudi2 GPU Is Stupid Accurate appeared first on Lowyat.NET.
